How Claude Code Works, And Why It Matters

Anthropic has recently shared a good deal about the architecture of everyone’s beloved coding agent, giving us the opportunity to pop Claude Code’s hood and see what we can learn from it.

One Loop at a Time

Counterintuitively, while Claude’s research agent uses a sophisticated orchestrator–worker pattern (where a lead agent plans and delegates tasks to subagents in parallel), Claude Code is built around a deceptively simple single-agent architecture that can be described as a `while(tool_use)` loop running tasks sequentially. To elaborate just a little: as long as the model’s message includes a tool call, that call is executed and its result is fed back into the model. When a message contains no tool call, the loop stops and the agent waits for user input. Even Michael Scott would understand this, and that’s the beauty of it.
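To make the loop concrete, here is a minimal sketch in Python. It is not Claude Code’s actual implementation: `Message`, `ToolCall`, `run_agent_loop`, the scripted stand-in model, the fake `read_file` tool, and the `max_turns` safety cap are all hypothetical names introduced for illustration.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class ToolCall:
    name: str       # which tool the model wants to run
    argument: str   # a single string argument, to keep the sketch small


@dataclass
class Message:
    text: str
    tool_call: Optional[ToolCall] = None  # None means "no tool requested"


def run_agent_loop(model, tools, user_input, max_turns=20):
    """Run tools sequentially until the model stops asking for one.

    `max_turns` is an assumed safety cap, not something the article describes.
    """
    history = [("user", user_input)]
    for _ in range(max_turns):
        message = model(history)
        history.append(("assistant", message.text))
        if message.tool_call is None:
            break  # no tool call: stop and wait for the user
        tool = tools[message.tool_call.name]
        result = tool(message.tool_call.argument)
        history.append(("tool", result))  # feed the result back to the model
    return history


def scripted_model(history):
    """Stand-in for a real model: read a file once, then answer."""
    tool_results = [content for role, content in history if role == "tool"]
    if not tool_results:
        return Message("Let me read the file first.",
                       ToolCall("read_file", "notes.txt"))
    return Message(f"The file says: {tool_results[-1]}")


def fake_read_file(path):
    """Hypothetical tool that pretends to read a file."""
    return f"<contents of {path}>"


history = run_agent_loop(scripted_model,
                         {"read_file": fake_read_file},
                         "Summarize notes.txt")
for role, content in history:
    print(f"{role}: {content}")
```

Everything the agent knows lives in `history`, which is why the loop is easy to inspect: there is no hidden state beyond the messages and tool results you can read.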

To Claude Code’s team, coding is fundamentally a dependency-driven activity, with each step often relying on prior context. They believe parallelization adds little benefit and much complexity, while multi-agent orchestration “burns through tokens fast” with minimal added value for coding workflows. As a result, Claude Code avoids using a critic pattern to review its work or adopting different roles. It also has no sophisticated memory system and does not rely on databases to represent knowledge. Instead, its power lies in simplicity, transparency, and fine-grained user control. This quest for simplicity is most obviously reflected in the choice of a CLI. A minimal but clean TUI is arguably part of Claude Code’s popularity.

Two Philosophies Of Coding Agents

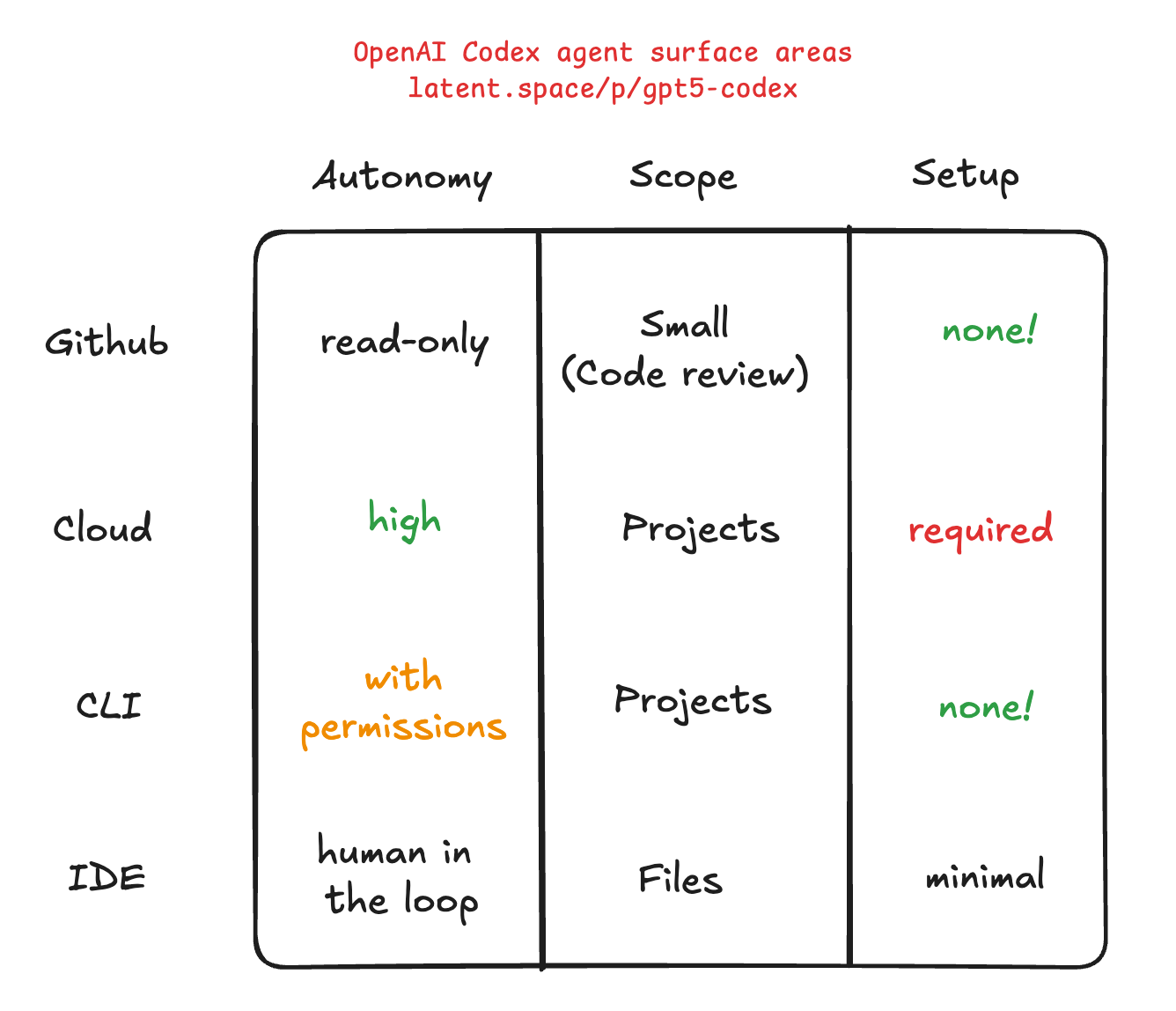

Interestingly, this philosophy is not shared by Anthropic’s main competitor, OpenAI. The architecture of the new GPT-5 Codex has not been detailed yet, but the way OpenAI describes it (in interviews, in the announcements around the GPT-5 Codex release, and in the design of its Responses API, which advocates stateful rather than stateless interactions) implies more complexity, and at least some parallelization with specialist agents for some tasks (or the ability for the user to parallelize at different levels, on device or in the cloud). “You don’t want to just have one instance of the model operating. You want to have multiple, right?” said Greg Brockman on the Latent Space podcast. “You want to be a manager not of an agent, but of agents.”

GPT-5 Codex thus pursues a broader vision of “agentic software engineering,” with embedded multi-turn, multi-step, thinking-with-tools abilities. This stands in contrast to the streamlined vision of Claude Code described in a recent issue of The Pragmatic Engineer newsletter (“How Claude Code is Built”): “There’s not all that much to Claude Code in terms of modules, components, and complex business logic on the client side… just a lightweight shell on top of the Claude model. This is because the model does almost all of the work.”

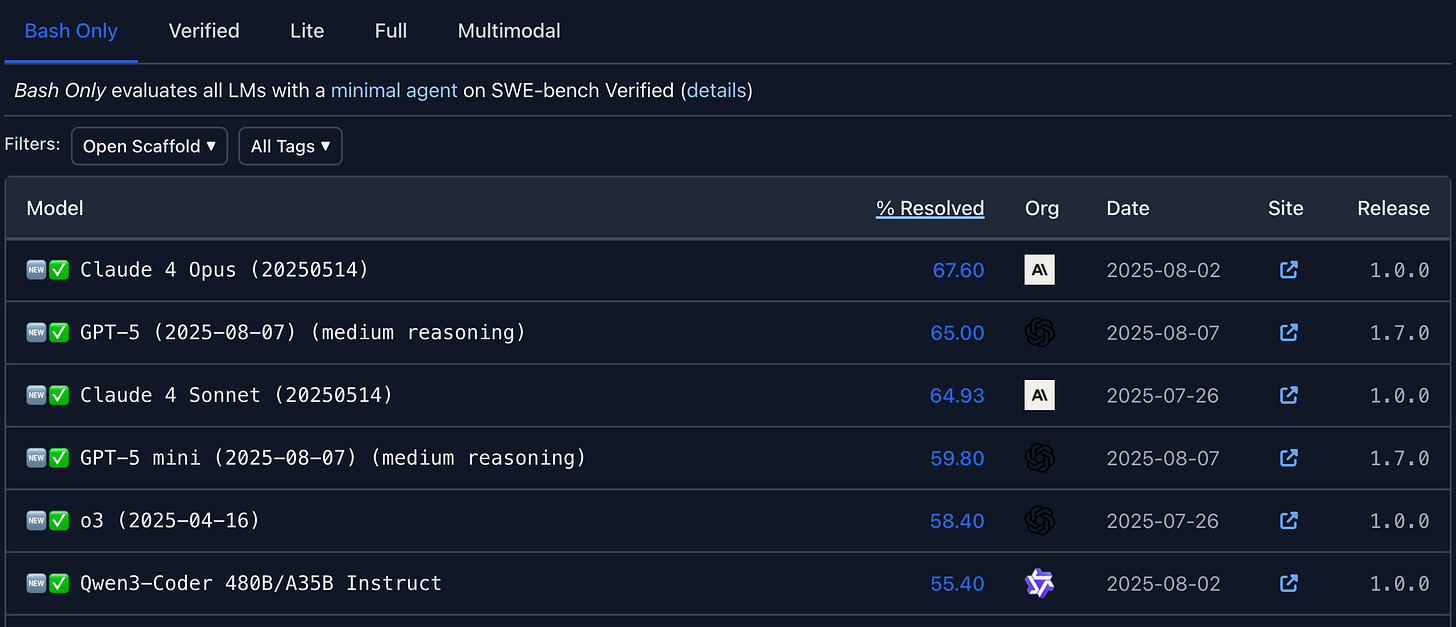

These two approaches, with their contrasting philosophies, workflows, and, it seems, architectures, don’t appear to produce very different results. SWE-bench, for what it’s worth, ranks Codex and Claude’s coding models (Opus and Sonnet) in the same state-of-the-art bucket. And from the many discussions I’ve had with engineers, teams tend to use both, but for different purposes, since their architectures lend themselves to different use cases.

How To Use Claude Code

While the latest major GPT-5 Codex update is too recent for a fair assessment of all its capabilities, Claude Code has been around long enough for field experience to accumulate. Jannes Klaas recently shared lessons in “Agent design lessons from Claude Code” that are very much in line with my own experience and observations and, more importantly, aligned with Claude Code’s architecture.

1. Leverage the Loop And Take Things Step by Step

As you might expect with the architecture described above, you should treat Claude Code not as an “all-knowing” oracle, but as a highly capable coworker who excels with clear instructions and visible feedback. Iteratively break problems down; let the agent loop resolve one step, then inspect or guide the next.

2. Use TODO Lists and Visible Planning

Since we know that Claude Code relies heavily on TODO lists, we should embrace this method, and not just prompt the model with goals, but ask it to write out its plan. Then iterate with it, challenge it and make it challenge your assumptions, until you feel you’re on the same page. Because there’s no persistent agent memory or opaque state, the plan has to be explicit. The design forces clarity and keeps the agent’s “thought process” inspectable.
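As an illustration of what an explicit, inspectable plan can look like, here is a small sketch of a TODO list kept as plain data and rendered as a checklist. The `TodoItem` structure, the three status names, and the `advance` helper are assumptions made for this example; they are not taken from Claude Code’s internals.

```python
from dataclasses import dataclass


@dataclass
class TodoItem:
    description: str
    status: str = "pending"  # assumed statuses: "pending", "in_progress", "done"


def render_plan(items):
    """Render the plan as a checklist that both user and agent can read."""
    marks = {"pending": "[ ]", "in_progress": "[~]", "done": "[x]"}
    return "\n".join(f"{marks[item.status]} {item.description}" for item in items)


def advance(items):
    """Mark the current step done, then start the next pending step."""
    for item in items:
        if item.status == "in_progress":
            item.status = "done"
            break
    for item in items:
        if item.status == "pending":
            item.status = "in_progress"
            break


plan = [
    TodoItem("Write a failing test for the date parser"),
    TodoItem("Implement the parser"),
    TodoItem("Run the full test suite"),
]
advance(plan)  # start step 1
advance(plan)  # finish step 1, start step 2
print(render_plan(plan))
```

Because only one step is in progress at a time, the rendered plan doubles as a record of where the agent is and what it intends to do next.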

3. Test-Driven, Feedback-Rich Development

It’s good practice in general, you might say, and working with an agent makes it even more relevant: ask Claude to write tests before writing the implementation. After each code change, ask it to run, test, and review the change. This looped, test-first mindset lets you catch errors early and gives the agent useful feedback (actual test results and logs). The lack of a critic or reviewer agent means test results and user comments are the agent’s own “teacher.”
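The test-first feedback cycle can be sketched in a few lines of Python. This is only an illustration under stated assumptions: `run_tests`, the two `attempt_*` functions, and the slug example are hypothetical, and the two attempts stand in for successive edits an agent might make after reading the failure messages.

```python
def run_tests(func, cases):
    """Run `func` against (args, expected) pairs and return failure messages."""
    failures = []
    for args, expected in cases:
        try:
            actual = func(*args)
        except Exception as exc:
            failures.append(f"{func.__name__}{args} raised {exc!r}")
            continue
        if actual != expected:
            failures.append(
                f"{func.__name__}{args} returned {actual!r}, expected {expected!r}"
            )
    return failures


# Step 1: the tests are written before any implementation exists.
cases = [
    (("Hello World",), "hello-world"),
    (("  Trim me ",), "trim-me"),
]


# Step 2: a first implementation that misses the whitespace case.
def attempt_1(title):
    return title.lower().replace(" ", "-")


# Step 3: a revision made after reading the failure message.
def attempt_2(title):
    return "-".join(title.lower().split())


first_feedback = run_tests(attempt_1, cases)
second_feedback = run_tests(attempt_2, cases)
print(first_feedback)   # the text you would hand back to the agent
print(second_feedback)  # an empty list means every case passes
```

The failure messages are the useful part: they give the agent concrete, checkable evidence about what went wrong instead of a vague “it doesn’t work.”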

4. Keep Context Manageable: Share Just Enough

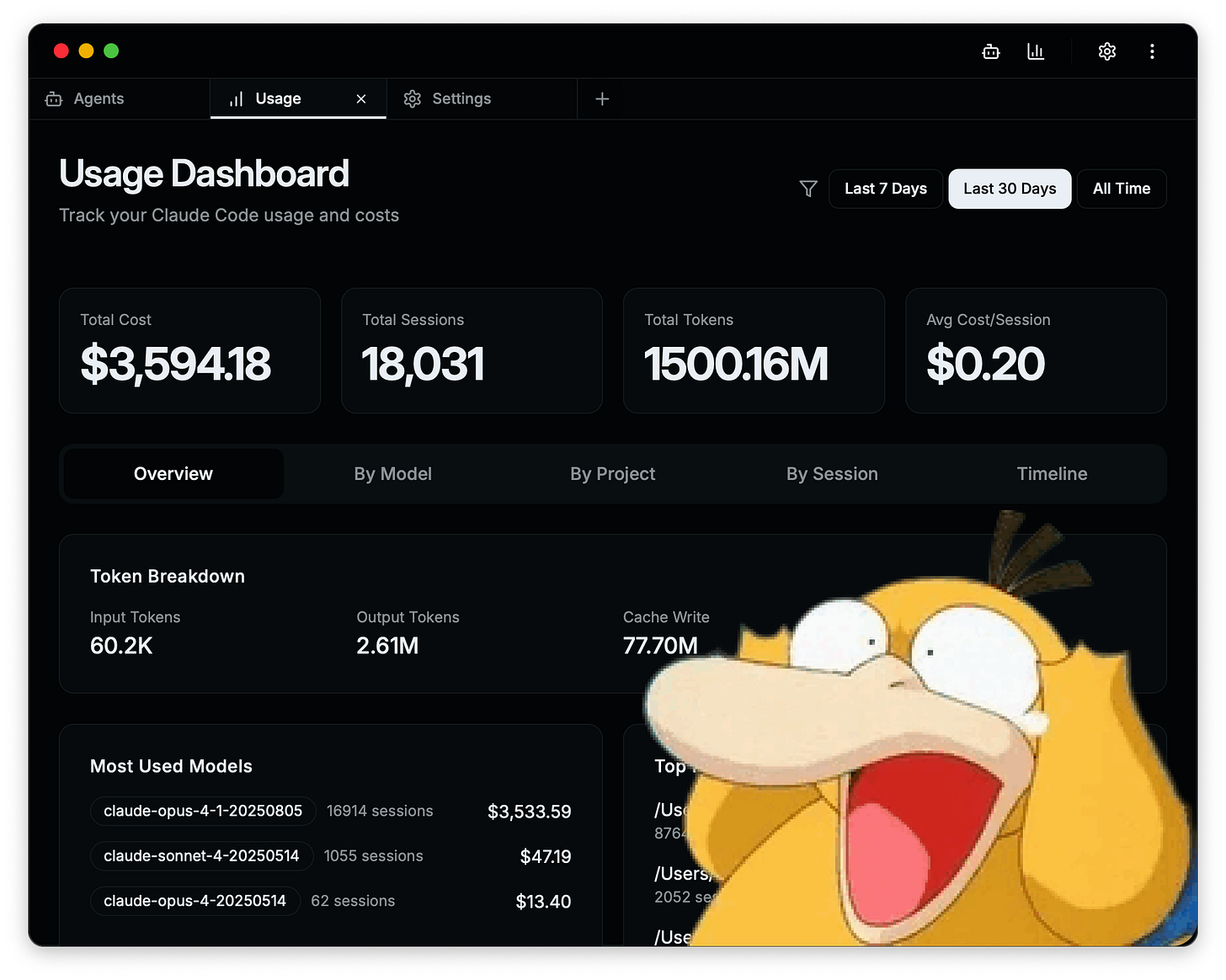

Avoid dumping massive files, folders, or project trees into the context. If you read my post from a few months ago, you might have noticed how many tokens I consumed. I believe one of my worst habits was pasting screenshots and full logs, instead of asking Claude Code to “focus” on small, relevant slices (specific files or diffs). Sharing only those slices prevents context window overflow and helps the agent stay sharp. As mentioned before, Claude Code doesn’t rely on a vector DB, RAG, or persistent memory; you and the agent must cooperatively curate what matters. The design rewards this discipline by reducing hallucinations and drift.
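One way to practice this is to trim a log before sharing it. The sketch below keeps only the lines around a keyword match, with a hard cap on the output size. The `relevant_slice` helper, its parameters, and the sample log are hypothetical and only illustrate the idea of sharing a small slice instead of the whole file.

```python
def relevant_slice(log_text, keyword="ERROR", context=1, max_lines=40):
    """Return only the lines near `keyword`, prefixed with line numbers."""
    lines = log_text.splitlines()
    keep = set()
    for i, line in enumerate(lines):
        if keyword in line:
            start = max(0, i - context)
            stop = min(len(lines), i + context + 1)
            keep.update(range(start, stop))
    selected = [f"{i + 1}: {lines[i]}" for i in sorted(keep)]
    return "\n".join(selected[:max_lines])  # cap what goes into the context


# A made-up 100-line log with a single error in the middle.
log_lines = [f"INFO step {n}" for n in range(1, 101)]
log_lines[49] = "ERROR connection refused"
full_log = "\n".join(log_lines)

snippet = relevant_slice(full_log)
print(snippet)
```

Here three lines reach the context instead of a hundred, and the line numbers make it easy to ask the agent to look at the exact spot in the original file.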

5. Prompt for Reflection: Ask for Explanations

Make Claude Code explain its reasoning, choices, or planned edits before it acts. Then have it proceed step by step, editing code only once the rationale is clear and vetted. It might feel slower, but trust me, it’s a huge gain overall. Once again, taking small steps and generating meaningful, precise TODOs help a lot in getting accurate results.

6. Stay in the Loop: Engage Frequently

Claude Code is not a “full pipeline” or background agent, but a single-agent loop awaiting explicit user input; ongoing partnership is fundamental to its design. The agent’s state is always what’s visible to you. In contrast with Codex, which aims for long tasks that might last hours, Claude Code favors high-touch, active, ongoing engagement. Don’t “fire and forget.” Check in, review plans, offer new instructions, and course-correct as needed.

An Objective Assessment

None of the practices above is just a “trick”: each one is baked into the core architecture of Claude Code. This agent works best when treated not as a “magic” black box, but as a highly visible, collaborative partner, using transparency, explicit plans, and an open conversation to build good software, one loop at a time. I haven’t spent enough time with the new Codex to judge, but my current thought is that the combination of both is great: Claude Code as the daily driver (straightforward and fast), and GPT-5 Codex for more elaborate tasks requiring long reasoning and execution time.

Getting to know more about the architecture behind the full ecosystem of tools around Codex would probably also allow us to use it more efficiently, rather than learning empirically through trial and error. Hopefully, OpenAI will share more soon about how the tools in their coding ecosystem actually work.